Standard Tools

Overview

Inspect has built-in tools for computing and agentic planning. Computing tools include:

- Web Search, which uses a search provider (either built in to the model or external) to execute and summarize web searches.

- Bash and Python for executing arbitrary shell and Python code.

- Bash Session for creating a stateful bash shell that retains its state across calls from the model.

- Text Editor, which enables viewing, creating, and editing text files.

- Computer, which provides the model with a desktop computer (viewed through screenshots) that supports mouse and keyboard interaction.

- Code Execution, which gives models a sandboxed Python code execution environment running within the model provider’s infrastructure.

- Web Browser, which provides the model with a headless Chromium web browser that supports navigation, history, and mouse/keyboard interactions.

Agentic tools include:

- Skill, which provides the model with agent skill specifications that supply specialized knowledge and expertise for specific tasks.

- Update Plan, which helps the model track steps and progress across longer horizon tasks.

- Memory, which enables storing and retrieving information through a memory file directory.

- Think, which provides models the ability to include an additional thinking step as part of getting to their final answer.

Web Search

The web_search() tool provides models the ability to enhance their context window by performing a search. Web searches are executed using a provider. Providers are split into two categories:

- Internal providers: "openai", "anthropic", "gemini", "grok", "mistral", and "perplexity". These use the model’s built-in search capability and do not require separate API keys. They work only with their respective model provider (e.g. the “openai” search provider works only for openai/* models).

- External providers: "tavily", "exa", and "google". These are external services that work with any model and require separate accounts and API keys. Note that “google” is different from “gemini”: “google” refers to Google’s Programmable Search Engine service, while “gemini” refers to Google’s built-in search capability for Gemini models.

By default, all internal providers are enabled if there are no external providers defined. If an external provider is defined then you need to explicitly enable internal providers that you want to use.

Internal providers will be prioritized if running on the corresponding model (e.g., “openai” provider will be used when running on openai models). If an internal provider is specified but the evaluation is run with a different model, a fallback external provider must also be specified.
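The selection rules above can be sketched in plain Python. This is an illustrative model only, not Inspect's implementation: the `choose_provider` helper is hypothetical, and it assumes the model's provider is the prefix before the first `/` in the model name and that this prefix matches the internal provider name.

```python
INTERNAL = {"openai", "anthropic", "gemini", "grok", "mistral", "perplexity"}
EXTERNAL = {"tavily", "exa", "google"}


def choose_provider(model: str, providers: list[str]) -> str:
    """Pick a search provider for `model` from the configured `providers`."""
    # Assumption: the model's provider is the prefix before the first "/".
    model_provider = model.split("/", 1)[0]

    # With nothing configured, every internal provider is treated as enabled.
    enabled = providers or sorted(INTERNAL)

    # An internal provider wins when it matches the model being run.
    if model_provider in INTERNAL and model_provider in enabled:
        return model_provider

    # Otherwise fall back to the first external provider, if one is listed.
    for name in enabled:
        if name in EXTERNAL:
            return name

    raise ValueError(f"no usable web search provider for {model!r}")
```

Under these assumptions, `choose_provider("openai/gpt-4o", ["openai", "tavily"])` selects the internal "openai" provider, while the same configuration run against an Anthropic model falls back to "tavily". A configuration with only a mismatched internal provider raises an error, which mirrors the requirement to specify an external fallback.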

Configuration

Most providers bill separately for web search, so you should consult their documentation for details before enabling this feature.

You can configure the web_search() tool in various ways:

    from inspect_ai.tool import web_search

    # use all internal providers
    web_search()

    # single external provider
    web_search("tavily")

    # internal provider and fallback
    web_search(["openai", "tavily"])

    # multiple internal providers and fallback
    web_search(["openai", "anthropic", "gemini", "mistral", "tavily"])

    # provider with specific options
    web_search({"tavily": {"max_results": 5}})

    # multiple providers with options
    web_search({
        "openai": True,
        "google": {"num_results": 5},
        "tavily": {"max_results": 5}
    })

OpenAI Options

The web_search() tool can use OpenAI’s built-in search capability when running on a limited number of OpenAI models (currently “gpt-4o”, “gpt-4o-mini”, and “gpt-4.1”). This provider does not require any API keys beyond what’s needed for the model itself.

For more details on OpenAI’s web search parameters, see OpenAI Web Search Documentation.

Note that when using the “openai” provider, you should also specify a fallback external provider (like “tavily”, “exa”, or “google”) if you are also running the evaluation with a non-OpenAI model.

Anthropic Options

The web_search() tool can use Anthropic’s built-in search capability when running on a limited number of Anthropic models (currently “claude-opus-4-20250514”, “claude-sonnet-4-20250514”, “claude-3-7-sonnet-20250219”, “claude-3-5-sonnet-latest”, “claude-3-5-haiku-latest”). This provider does not require any API keys beyond what’s needed for the model itself.

For more details on Anthropic’s web search parameters, see Anthropic Web Search Documentation.

Note that when using the “anthropic” provider, you should also specify a fallback external provider (like “tavily”, “exa”, or “google”) if you are also running the evaluation with a non-Anthropic model.

Gemini Options

The web_search() tool can use Google’s built-in search capability (called grounding) when running on Gemini 2.0 models and later. This provider does not require any API keys beyond what’s needed for the model itself.

This is distinct from the “google” provider (described below), which uses Google’s external Programmable Search Engine service and requires separate API keys.

For more details, see Grounding with Google Search.

Note that when using the “gemini” provider, you should also specify a fallback external provider (like “tavily”, “exa”, or “google”) if you are also running the evaluation with non-Gemini models.

Google’s search grounding does not currently support use with other tools. Attempting to use web_search("gemini") alongside other tools will result in an error.

Grok Options

The web_search() tool can use Grok’s built-in live search capability when running on Grok 3.0 models and later. This provider does not require any API keys beyond what’s needed for the model itself.

For more details, see Live Search.

Note that when using the “grok” provider, you should also specify a fallback external provider (like “tavily”, “exa”, or “google”) if you are also running the evaluation with non-Grok models.

Perplexity Options

The web_search() tool can use Perplexity’s built-in search capability when running on Perplexity models. This provider does not require any API keys beyond what’s needed for the model itself. Search parameters can be passed using the perplexity provider options and will be forwarded to the model API.

For more details, see Perplexity API Documentation.

Note that when using the “perplexity” provider, you should also specify a fallback external provider (like “tavily”, “exa”, or “google”) if you are also running the evaluation with non-Perplexity models.

Tavily Options

The web_search() tool can use Tavily’s Research API. To use it you will need to set up your own Tavily account. Then, ensure that the following environment variable is defined:

- TAVILY_API_KEY: Tavily Research API key

Tavily supports the following options:

| Option | Description |
|---|---|
| max_results | Number of results to return |
| search_depth | Can be “basic” or “advanced” |
| topic | Can be “general” or “news” |
| include_domains / exclude_domains | Lists of domains to include or exclude |
| time_range | Time range for search results (e.g., “day”, “week”, “month”) |
| max_connections | Maximum number of concurrent connections |

For more options, see the Tavily API Documentation.

Exa Options

The web_search() tool can use Exa’s Answer API. To use it you will need to set up your own Exa account. Then, ensure that the following environment variable is defined:

- EXA_API_KEY: Exa API key

Exa supports the following options:

| Option | Description |
|---|---|
| text | Whether to include text content in citations (defaults to true) |
| model | LLM model to use for generating the answer (“exa” or “exa-pro”) |
| max_connections | Maximum number of concurrent connections |

For more details, see the Exa API Documentation.

Google Options

The web_search() tool can use Google Programmable Search Engine as an external provider. This is different from the “gemini” provider (described above), which uses Google’s built-in search capability for Gemini models.

To use the “google” provider you will need to set up your own Google Programmable Search Engine and also enable the Programmable Search Element Paid API. Then, ensure that the following environment variables are defined:

- GOOGLE_CSE_ID: Google Custom Search Engine ID

- GOOGLE_CSE_API_KEY: Google API key used to enable the Search API

Google supports the following options:

| Option | Description |
|---|---|
| num_results | The number of relevant webpages whose contents are returned |
| max_provider_calls | Number of times to retrieve more links in case previous ones were irrelevant (defaults to 3) |
| max_connections | Maximum number of concurrent connections (defaults to 10) |
| model | Model to use to determine if search results are relevant (defaults to the model being evaluated) |
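Since each external provider depends on environment variables, it can help to check for them before starting an evaluation. The following is a minimal sketch using only the variable names listed in the sections above. The `missing_env` helper is hypothetical and is not part of Inspect.

```python
import os

# Environment variables named in the external provider sections above.
REQUIRED_ENV = {
    "tavily": ["TAVILY_API_KEY"],
    "exa": ["EXA_API_KEY"],
    "google": ["GOOGLE_CSE_ID", "GOOGLE_CSE_API_KEY"],
}


def missing_env(provider: str, env=None) -> list[str]:
    """Return the required variables that are unset or empty for `provider`."""
    env = os.environ if env is None else env
    # Internal providers have no entry here and so require nothing extra.
    return [name for name in REQUIRED_ENV.get(provider, []) if not env.get(name)]
```

Calling `missing_env("google")` before a run reports which of the two Google variables still need to be defined. Passing a dictionary as `env` makes the check easy to test without touching the real environment.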

Bash and Python

The bash() and python() tools enable execution of arbitrary shell commands and Python code, respectively. These tools require the use of a Sandbox Environment for the execution of untrusted code. For example, here is how you might use them in an evaluation where the model is asked to write code in order to solve capture the flag (CTF) challenges:

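    from inspect_ai import Task, task
    from inspect_ai.scorer import includes
    from inspect_ai.solver import generate, system_message, use_tools
    from inspect_ai.tool import bash, python

    # timeout (in seconds) applied to each bash and python tool call
    CMD_TIMEOUT = 180

    @task
    def intercode_ctf():
        return Task(
            dataset=read_dataset(),  # read_dataset() stands in for your own dataset loader
            solver=[
                system_message("system.txt"),
                use_tools([
                    bash(CMD_TIMEOUT),
                    python(CMD_TIMEOUT)
                ]),
                generate(),
            ],
            scorer=includes(),
            message_limit=30,
            sandbox="docker",
        )

We specify a 3-minute timeout for execution of the bash and python tools to ensure that they don’t perform extremely long running operations.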
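To see why a timeout matters, here is a small standalone sketch that runs code in a child Python process and gives up after a deadline. It uses only the standard library and is not how Inspect implements tool timeouts. The `run_with_timeout` helper is hypothetical.

```python
import subprocess
import sys


def run_with_timeout(code: str, timeout: float) -> str:
    """Run `code` in a child Python process, giving up after `timeout` seconds."""
    try:
        result = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
        return result.stdout
    except subprocess.TimeoutExpired:
        # subprocess.run() kills the child before raising TimeoutExpired.
        return f"timed out after {timeout} seconds"
```

A quick command such as `run_with_timeout("print('ok')", 30)` returns its output. A command that sleeps longer than the deadline returns the timeout message instead of blocking the caller indefinitely.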

See the Agents section for more details on how to build evaluations that allow models to take arbitrary actions over a longer time horizon.

Bash Session

The bash_session() tool provides a bash shell that retains its state across calls from the model (as distinct from the bash() tool which executes each command in a fresh session). The prompt, working directory, and environment variables are all retained across calls. The tool also supports a restart action that enables the model to reset its state and work in a fresh session.

Note that a separate bash process is created within the sandbox for each instance of the bash session tool. See the bash_session() reference docs for details on customizing this behavior.

Configuration

Bash sessions require the use of a Sandbox Environment for the execution of untrusted code.

Task Setup

A task configured to use the bash session tool might look like this:

    from inspect_ai import Task, task
    from inspect_ai.scorer import includes
    from inspect_ai.solver import generate, system_message, use_tools
    from inspect_ai.tool import bash_session

    @task
    def intercode_ctf():
        return Task(
            dataset=read_dataset(),
            solver=[
                system_message("system.txt"),
                use_tools([bash_session(timeout=180)]),
                generate(),
            ],
            scorer=includes(),
            sandbox=("docker", "compose.yaml")
        )

Note that we provide a timeout for bash session commands (this is a best practice to guard against extremely long running commands).

Text Editor

The text_editor() tool enables viewing, creating and editing text files. The tool supports editing files within a protected Sandbox Environment so tasks that use the text editor should have a sandbox defined and configured as described below.

Configuration

The text editor tool requires the use of a Sandbox Environment.

Task Setup

A task configured to use the text editor tool might look like this (note that this task is also configured to use the bash_session() tool):

    from inspect_ai import Task, task
    from inspect_ai.scorer import includes
    from inspect_ai.solver import generate, system_message, use_tools
    from inspect_ai.tool import bash_session, text_editor

    @task
    def intercode_ctf():
        return Task(
            dataset=read_dataset(),
            solver=[
                system_message("system.txt"),
                use_tools([
                    bash_session(timeout=180),
                    text_editor(timeout=180)
                ]),
                generate(),
            ],
            scorer=includes(),
            sandbox=("docker", "compose.yaml")
        )

Note that we provide a timeout for the bash session and text editor tools (this is a best practice to guard against extremely long running commands).

Tool Binding

The schema for the text_editor() tool is based on the standard Anthropic text editor tool type. The text_editor() tool works with all models that support tool calling, but when using Claude, it will automatically bind to the native Claude tool definition.

Computer

The computer() tool provides models with a computer desktop environment along with the ability to view the screen and perform mouse and keyboard gestures.

The computer tool works with any model that supports image input. It also binds directly to the internal computer tool definitions for Anthropic and OpenAI models tuned for computer use (currently anthropic/claude-3-7-sonnet-latest and openai/computer-use-preview).

Configuration

The computer() tool runs within a Docker container. To use it with a task you need to reference the aisiuk/inspect-computer-tool image in your Docker compose file. For example:

compose.yaml

    services:
      default:
        image: aisiuk/inspect-computer-tool

You can configure the container to not have Internet access as follows:

compose.yaml

    services:
      default:
        image: aisiuk/inspect-computer-tool
        network_mode: none

Note that if you’d like to be able to view the model’s interactions with the computer desktop in realtime, you will need to also do some port mapping to enable a VNC connection with the container. See the VNC Client section below for details on how to do this.

The aisiuk/inspect-computer-tool image is based on the ubuntu:22.04 image and includes the following additional applications pre-installed:

- Firefox

- VS Code

- Xpdf

- Xpaint

- galculator

Task Setup

A task configured to use the computer tool might look like this:

    from inspect_ai import Task, task
    from inspect_ai.scorer import match
    from inspect_ai.solver import generate, use_tools
    from inspect_ai.tool import computer

    @task
    def computer_task():
        return Task(
            dataset=read_dataset(),
            solver=[
                use_tools([computer()]),
                generate(),
            ],
            scorer=match(),
            sandbox=("docker", "compose.yaml"),
        )

To evaluate the task with models tuned for computer use:

    inspect eval computer.py --model anthropic/claude-3-7-sonnet-latest
    inspect eval computer.py --model openai/computer-use-preview

Options

The computer tool supports the following options:

| Option | Description |
|---|---|
| max_screenshots | The maximum number of screenshots to play back to the model as input. Defaults to 1 (set to None to have no limit). |
| timeout | Timeout in seconds for computer tool actions. Defaults to 180 (set to None for no timeout). |

For example:

    solver=[
        use_tools([computer(max_screenshots=2, timeout=300)]),
        generate()
    ]

Examples

Two of the Inspect examples demonstrate basic computer use:

computer: Three simple computing tasks as a minimal demonstration of computer use.

    inspect eval examples/computer

intervention: Computer task driven interactively by a human operator.

    inspect eval examples/intervention -T mode=computer --display conversation

VNC Client

You can use a VNC connection to the container to watch computer use in real-time. This requires some additional port-mapping in the Docker compose file. You can define dynamic port ranges for VNC (5900) and a browser based noVNC client (6080) with the following ports entries:

compose.yaml

services:

default:

image: aisiuk/inspect-computer-tool

ports:

- "5900"

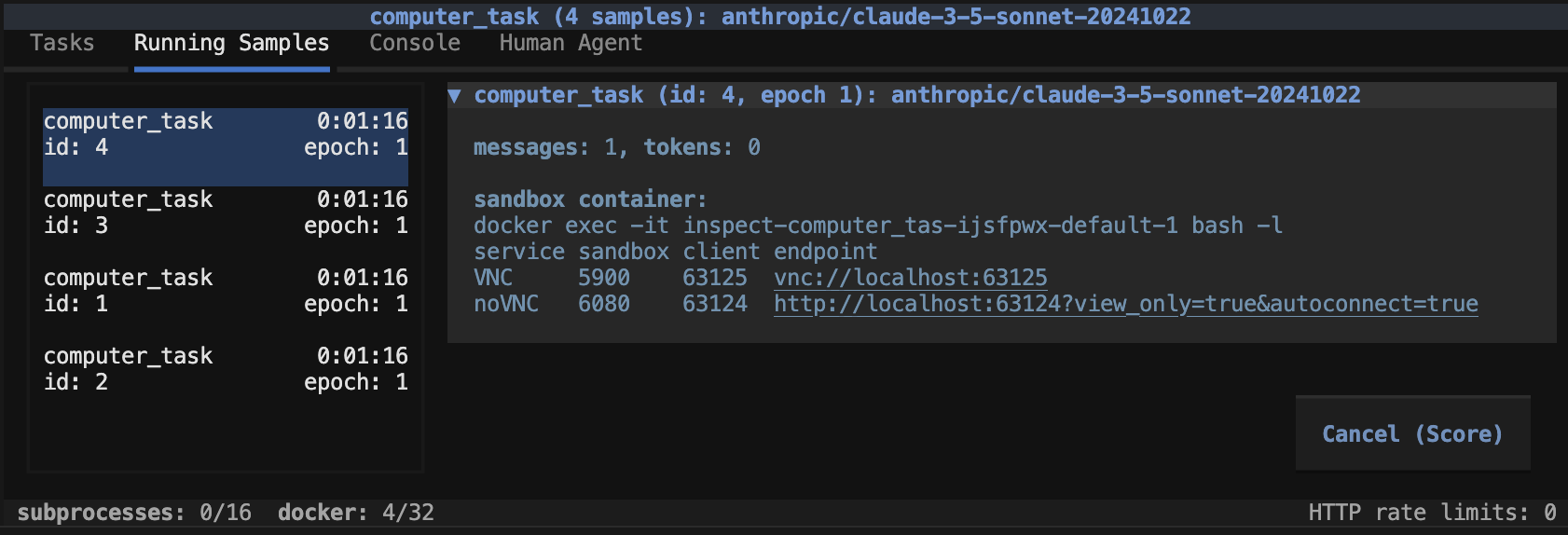

- "6080"To connect to the container for a given sample, locate the sample in the Running Samples UI and expand the sample info panel at the top:

Click on the link for the noVNC browser client, or use a native VNC client to connect to the VNC port. Note that the VNC server will take a few seconds to start up so you should give it some time and attempt to reconnect as required if the first connection fails.

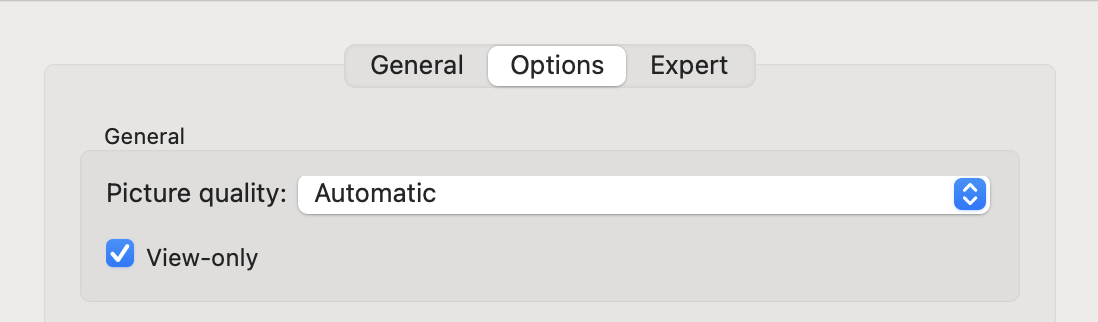

The browser based client provides a view-only interface. If you use a native VNC client you should also set it to “view only” so as to not interfere with the model’s use of the computer. For example, for Real VNC Viewer:

Approval

If the container you are using is connected to the Internet, you may want to configure human approval for a subset of computer tool actions. Here are the possible actions (specified using the action parameter to the computer tool):

- key: Press a key or key-combination on the keyboard.
- type: Type a string of text on the keyboard.
- cursor_position: Get the current (x, y) pixel coordinate of the cursor on the screen.
- mouse_move: Move the cursor to a specified (x, y) pixel coordinate on the screen.
  - Example: execute(action="mouse_move", coordinate=(100, 200))
- left_click: Click the left mouse button.
- left_click_drag: Click and drag the cursor to a specified (x, y) pixel coordinate on the screen.
- right_click: Click the right mouse button.
- middle_click: Click the middle mouse button.
- double_click: Double-click the left mouse button.
- screenshot: Take a screenshot.

Here is an approval policy that requires approval for key combos (e.g. Enter or a shortcut) and mouse clicks:

approval.yaml

    approvers:
      - name: human
        tools:
          - computer(action='key'
          - computer(action='left_click'
          - computer(action='middle_click'
          - computer(action='double_click'

      - name: auto
        tools: "*"

Note that since this is a prefix match and there could be other arguments, we don’t end the tool match pattern with a closing parenthesis.
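The effect of leaving off the closing parenthesis can be illustrated with a simplified model of prefix matching. This sketch is not Inspect's matching code: the `render_call` and `needs_human` helpers are hypothetical, and the assumption is that a tool call is rendered as text in the form `tool(arg='value', ...)` before being compared against the patterns.

```python
def render_call(tool: str, arguments: dict) -> str:
    """Render a tool call as text, e.g. computer(action='key', text='Return')."""
    args = ", ".join(f"{name}={value!r}" for name, value in arguments.items())
    return f"{tool}({args})"


def needs_human(call: str, patterns: list[str]) -> bool:
    """True when the rendered call starts with any of the patterns."""
    return any(call.startswith(pattern) for pattern in patterns)


patterns = ["computer(action='key'", "computer(action='left_click'"]
call = render_call("computer", {"action": "key", "text": "Return"})
```

Here `needs_human(call, patterns)` is true because the open-ended pattern tolerates the extra `text` argument. A pattern written as `computer(action='key')` would not match the same call. The closing quote in each pattern also keeps `left_click` from matching `left_click_drag`.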

You can apply this policy using the --approval command line option:

    inspect eval computer.py --approval approval.yaml

Tool Binding

The computer tool’s schema is a superset of the standard Anthropic and OpenAI computer tool schemas. When using models tuned for computer use (currently anthropic/claude-3-7-sonnet-latest and openai/computer-use-preview) the computer tool will automatically bind to the native computer tool definitions (as this presumably provides improved performance).

If you want to experiment with bypassing the native computer tool types and just register the computer tool as a normal function based tool then specify the --no-internal-tools generation option as follows:

    inspect eval computer.py --no-internal-tools

Code Execution

Overview

The code_execution() tool provides models with the ability to execute Python code within a sandboxed environment. There are two significant differences between code execution and the python() tool described above:

- Code runs in a sandbox on the model provider’s server (as opposed to e.g. a locally managed Docker container).

- Code runs in a stateless environment (each execution is independent of others and no file-system state is preserved across calls).

Since the code execution tool is stateless, it is more suitable as a means to assist with problem solving than for more stateful agentic tasks.
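The practical consequence of statelessness can be shown with a toy model in which temporary directories stand in for the execution environment's file system. This is only an analogy built on the standard library, not a description of any provider's sandbox. The `write_then_read` helper is hypothetical.

```python
import tempfile
from pathlib import Path


def write_then_read(shared_workdir: bool) -> bool:
    """Write a file in one 'call' and report whether a second 'call' can see it."""
    with tempfile.TemporaryDirectory() as first, tempfile.TemporaryDirectory() as second:
        # Call 1 saves an intermediate result in its working directory.
        (Path(first) / "result.txt").write_text("459135")
        # Call 2 sees the same directory only when state is preserved.
        workdir = Path(first) if shared_workdir else Path(second)
        return (workdir / "result.txt").exists()
```

With a shared working directory the second call finds the file. With a fresh directory per call it does not, so any multi-step task has to carry intermediate results through the conversation instead of the file system.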

Here is a simple example using the code_execution() tool:

    from inspect_ai import Task, task
    from inspect_ai.dataset import Sample
    from inspect_ai.agent import react
    from inspect_ai.tool import code_execution

    @task
    def code_execution_task():
        return Task(
            dataset=[Sample("Add 435678 + 23457")],
            solver=react(tools=[code_execution()])
        )

Availability

OpenAI, Anthropic, Google, and Grok models all have support for native server-side Python code execution. Note that Anthropic can additionally execute bash and text editor commands, but the primary execution language used is still Python.

Note that some providers bill separately for code execution, so you should consult their documentation for details before enabling this feature.

Fallback

If you are using a provider that doesn’t support code execution then a fallback using the python() tool is provided. Additionally, you can optionally disable code execution for a provider with a native implementation and use the python() tool instead.

Here are some example configurations:

    # default (native where supported, python as fallback):
    code_execution()

    # selectively disable native (will fallback to python)
    code_execution({ "grok": False, "openai": False })

    # disable python fallback
    code_execution({ "python": False })

    # provide openai container options
    code_execution(
        {"openai": {"container": {"type": "auto", "memory_limit": "4g" }}}
    )

When falling back to the python() provider you should ensure that your Task has a sandbox with access to Python enabled.
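The fallback rules can be summarized as a small decision function. This is a sketch of the behavior described above, not Inspect's code. The `resolve_backend` helper is hypothetical, and using "anthropic" and "google" as configuration keys (alongside the "openai", "grok", and "python" keys shown in the examples) is an assumption.

```python
from typing import Optional

# Providers described above as supporting native server-side code execution.
NATIVE_PROVIDERS = {"openai", "anthropic", "google", "grok"}


def resolve_backend(model_provider: str, config: Optional[dict] = None) -> Optional[str]:
    """Return "native", "python", or None (no code execution available)."""
    config = config or {}

    # A provider is native unless it was explicitly disabled with False;
    # an options dictionary (e.g. container settings) leaves it enabled.
    native_enabled = config.get(model_provider, True) is not False
    if model_provider in NATIVE_PROVIDERS and native_enabled:
        return "native"

    # Otherwise use the python() fallback unless that was disabled too.
    if config.get("python", True) is not False:
        return "python"
    return None
```

For example, `resolve_backend("openai", {"openai": False})` yields "python", while a provider without native support combined with `{"python": False}` yields no backend at all.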

Web Browser

The web browser tools provide models with the ability to browse the web using a headless Chromium browser. Navigation, history, and mouse/keyboard interactions are all supported.

The web_browser() tool uses a headless browser for interacting with the web. However, as of 2026, many websites have incorporated defenses against headless browsers. Therefore if you want to do generalized web information retrieval you should strongly prefer the web_search() tool.

If however you are using the web browser to interact with a local web application or specific sites you know don’t block it then this warning isn’t applicable.

Configuration

Under the hood, the web browser is an instance of Chromium orchestrated by Playwright, and runs in a Sandbox Environment. In addition, you’ll need some dependencies installed in the sandbox container. Please see Sandbox Dependencies below for additional instructions.

Note that Playwright (used for the web_browser() tool) does not support some versions of Linux (e.g. Kali Linux).

You should add the following to your sandbox Dockerfile in order to use the web browser tool:

    RUN apt-get update && apt-get install -y pipx && \
        apt-get clean && rm -rf /var/lib/apt/lists/*
    ENV PATH="$PATH:/opt/inspect/bin"
    RUN PIPX_HOME=/opt/inspect/pipx PIPX_BIN_DIR=/opt/inspect/bin PIPX_VENV_DIR=/opt/inspect/pipx/venvs \
        pipx install inspect-tool-support && \
        chmod -R 755 /opt/inspect && \
        inspect-tool-support post-install

If you don’t have a custom Dockerfile, you can alternatively use the pre-built aisiuk/inspect-tool-support image:

compose.yaml

    services:
      default:
        image: aisiuk/inspect-tool-support
        init: true

Task Setup

A task configured to use the web browser tools might look like this:

    from inspect_ai import Task, task
    from inspect_ai.scorer import match
    from inspect_ai.solver import generate, use_tools
    from inspect_ai.tool import bash, python, web_browser

    @task
    def browser_task():
        return Task(
            dataset=read_dataset(),
            solver=[
                use_tools([bash(), python()] + web_browser()),
                generate(),
            ],
            scorer=match(),
            sandbox=("docker", "compose.yaml"),
        )

Unlike some other tool functions like bash(), the web_browser() function returns a list of tools. Therefore, we concatenate it with a list of the other tools we are using in the call to use_tools().

Note that a separate web browser process is created within the sandbox for each instance of the web browser tool. See the web_browser() reference docs for details on customizing this behavior.

Browsing

If you review the transcripts of a sample with access to the web browser tool, you’ll notice that there are several distinct tools made available for control of the web browser. These tools include:

| Tool | Description |
|---|---|
| web_browser_go(url) | Navigate the web browser to a URL. |
| web_browser_click(element_id) | Click an element on the page currently displayed by the web browser. |
| web_browser_type(element_id, text) | Type text into an input on a web browser page. |
| web_browser_type_submit(element_id, text) | Type text into a form input on a web browser page and press ENTER to submit the form. |
| web_browser_scroll(direction) | Scroll the web browser up or down by one page. |
| web_browser_forward() | Navigate the web browser forward in the browser history. |
| web_browser_back() | Navigate the web browser back in the browser history. |
| web_browser_refresh() | Refresh the current page of the web browser. |

The return value of each of these tools is a web accessibility tree for the page, which provides a clean view of the content, links, and form fields available on the page (you can look at the accessibility tree for any web page using Chrome Developer Tools).

Disabling Interactions

You can use the web browser tools with page interactions disabled by specifying interactive=False, for example:

    use_tools(web_browser(interactive=False))

In this mode, the interactive tools (web_browser_click(), web_browser_type(), and web_browser_type_submit()) are not made available to the model.
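As a quick reference, the following sketch lists which tool names remain in each mode. It models only the tool names from the table above and does not call Inspect. The `available_tools` helper is hypothetical.

```python
ALL_TOOLS = [
    "web_browser_go",
    "web_browser_click",
    "web_browser_type",
    "web_browser_type_submit",
    "web_browser_scroll",
    "web_browser_forward",
    "web_browser_back",
    "web_browser_refresh",
]

# Tools that interact with page elements and are removed when interactive=False.
INTERACTIVE_TOOLS = {"web_browser_click", "web_browser_type", "web_browser_type_submit"}


def available_tools(interactive: bool = True) -> list[str]:
    """Return the browser tool names exposed to the model in the given mode."""
    if interactive:
        return list(ALL_TOOLS)
    return [name for name in ALL_TOOLS if name not in INTERACTIVE_TOOLS]
```

With interactions disabled, the model can still navigate, scroll, move through history, and refresh, which is enough for read-only browsing.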

Skill

The skill() tool provides models with agent skills, which are folders of instructions, scripts, and resources that agents can discover and use to do things more accurately and efficiently.

Skills were originally created as a feature of Claude Code, but are now widely supported by many agents and agent frameworks. You can learn more about creating skills at:

The skill() tool takes a list of paths that contain standard skill specifications, copies them into the sample’s sandbox, and provides a tool description that enumerates the available skills. For example, here we make available “system-info” and “network-info” skills:

    from pathlib import Path

    from inspect_ai import Task, task
    from inspect_ai.scorer import includes
    from inspect_ai.agent import react
    from inspect_ai.tool import bash, skill

    SKILLS_DIR = Path(__file__).parent / "skills"

    @task
    def intercode_ctf():

        # define skill tool
        skill_tool = skill(
            [
                SKILLS_DIR / "system-info",
                SKILLS_DIR / "network-info",
            ]
        )

        return Task(
            dataset=read_dataset(),
            solver=react(tools=[bash(timeout=180), skill_tool]),
            scorer=includes(),
            sandbox=("docker", "compose.yaml")
        )

Note that use of the skill() tool requires that a sandbox be defined for the task so there is a filesystem to publish the skills within.

Update Plan

The update_plan() tool provides models with a way to track steps and progress in longer horizon tasks where it might otherwise lose track of where it is or forget earlier goals as context grows. It can also make agent behavior more interpretable, since you can inspect the plan to understand what the model thinks it’s trying to accomplish.

Note though that for simpler tasks, plan maintenance is just overhead, and some models may fixate on updating the plan rather than actually executing it.
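To make the idea of a tracked plan concrete, here is a minimal sketch of a plan as a list of steps with a status each. The schema is an assumption for illustration: the `PlanStep` class, the `summarize` helper, and the three status values are hypothetical and may not match the arguments the real tool accepts.

```python
from dataclasses import dataclass

# Assumed status values; the real tool's schema may differ.
STATUSES = ("pending", "in_progress", "completed")


@dataclass
class PlanStep:
    description: str
    status: str = "pending"


def summarize(plan: list[PlanStep]) -> str:
    """Validate a plan and return a one-line progress summary."""
    for step in plan:
        if step.status not in STATUSES:
            raise ValueError(f"unknown status: {step.status!r}")
    done = sum(step.status == "completed" for step in plan)
    current = next((s.description for s in plan if s.status == "in_progress"), "nothing")
    return f"{done}/{len(plan)} steps completed; working on: {current}"


plan = [
    PlanStep("Inspect the challenge files", "completed"),
    PlanStep("Find the encoded flag", "in_progress"),
    PlanStep("Decode and submit the flag"),
]
```

A summary like the one `summarize(plan)` produces is the kind of information that makes a long transcript easier to interpret, since it shows what the model believes it has finished and what it is doing now.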

Task Setup

A task configured to use the update_plan tool might look like this:

    from inspect_ai import Task, task
    from inspect_ai.scorer import includes
    from inspect_ai.agent import react
    from inspect_ai.tool import bash, update_plan

    @task
    def intercode_ctf():
        return Task(
            dataset=read_dataset(),
            solver=react(tools=[bash(timeout=180), update_plan()]),
            scorer=includes(),
            sandbox=("docker", "compose.yaml")
        )

The update_plan tool is based on the update_plan tool used by Codex CLI. The default tool description is taken from the GPT 5.1 system prompt for Codex. Pass a custom description to override this.

Memory

The memory tool enables models to store information in, and retrieve it from, a /memories file directory. Models can create, read, update, and delete files, enabling them to preserve knowledge over time without keeping everything in the context window.

Task Setup

A task configured to use the memory tool might look like this:

    from inspect_ai import Task, task
    from inspect_ai.scorer import includes
    from inspect_ai.agent import react
    from inspect_ai.solver import system_message
    from inspect_ai.tool import bash, memory

    @task
    def intercode_ctf():
        return Task(
            dataset=read_dataset(),
            solver=[
                system_message("system.txt"),
                react(tools=[bash(timeout=180), memory()]),
            ],
            scorer=includes(),
            sandbox=("docker", "compose.yaml")
        )

Seeding Memories

You can seed the memories from sample data by passing initial_data to the memory() tool. For example:

    memory(
        initial_data = {
            "/memories/notes.md": "<text or file path>",
            "/memories/theories.md": "<text or file path>"
        }
    )

Keys should be valid /memories paths (e.g. “/memories/notes.md”). Values are resolved via resource(), supporting inline strings, file paths, or remote resources (s3://, https://). Seeding happens once on first tool execution. The model is prompted to read any pre-seeded memories before beginning work.
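Because keys must be valid /memories paths, it can be useful to check a seed dictionary before passing it to the tool. The following is a minimal sketch of one plausible check. The `is_valid_memory_path` helper is hypothetical, and the exact validation rules the tool applies are not stated here.

```python
from pathlib import PurePosixPath

MEMORY_ROOT = PurePosixPath("/memories")


def is_valid_memory_path(key: str) -> bool:
    """Check that `key` is an absolute path to something inside /memories."""
    path = PurePosixPath(key)
    # Reject relative paths and any attempt to climb out with "..".
    if not path.is_absolute() or ".." in path.parts:
        return False
    # The key must name something inside /memories, not the directory itself.
    return MEMORY_ROOT in path.parents
```

Running this over the keys of `initial_data` before constructing the tool catches mistakes such as a missing leading slash or a path outside the memory directory.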

Tool Binding

The schema for the memory() tool is based on the standard Anthropic memory tool type. The memory() tool works with all models that support tool calling, but when using Claude, the memory tool will automatically bind to the native Claude tool definition.

Think

The think() tool provides models with the ability to include an additional thinking step as part of getting to their final answer.

Note that the think() tool is not a substitute for reasoning and extended thinking, but rather an alternate way of letting models express thinking that is better suited to some tool use scenarios.

Usage

You should read the original think tool article in its entirety to understand where and where not to use the think tool. In summary, good contexts for the think tool include:

- Tool output analysis. When models need to carefully process the output of previous tool calls before acting and might need to backtrack in their approach;

- Policy-heavy environments. When models need to follow detailed guidelines and verify compliance; and

- Sequential decision making. When each action builds on previous ones and mistakes are costly (often found in multi-step domains).

Use the think() tool alongside other tools like this:

    from inspect_ai import Task, task
    from inspect_ai.scorer import includes
    from inspect_ai.solver import generate, system_message, use_tools
    from inspect_ai.tool import bash_session, text_editor, think

    @task
    def intercode_ctf():
        return Task(
            dataset=read_dataset(),
            solver=[
                system_message("system.txt"),
                use_tools([
                    bash_session(timeout=180),
                    text_editor(timeout=180),
                    think()
                ]),
                generate(),
            ],
            scorer=includes(),
            sandbox=("docker", "compose.yaml")
        )

Tool Description

In the original think tool article (which was based on experimenting with Claude) they found that providing clear instructions on when and how to use the think() tool for the particular problem domain it is being used within could sometimes be helpful. For example, here’s the prompt they used with SWE-Bench:

    from textwrap import dedent

    from inspect_ai import Task, task
    from inspect_ai.scorer import includes
    from inspect_ai.solver import generate, system_message, use_tools
    from inspect_ai.tool import bash_session, text_editor, think

    @task
    def swe_bench():

        # tools, including a think() tool with a custom description
        tools = [
            bash_session(timeout=180),
            text_editor(timeout=180),
            think(dedent("""
                Use the think tool to think about something. It will not obtain
                new information or make any changes to the repository, but just
                log the thought. Use it when complex reasoning or brainstorming
                is needed. For example, if you explore the repo and discover
                the source of a bug, call this tool to brainstorm several unique
                ways of fixing the bug, and assess which change(s) are likely to
                be simplest and most effective. Alternatively, if you receive
                some test results, call this tool to brainstorm ways to fix the
                failing tests.
            """))
        ]

        return Task(
            dataset=read_dataset(),
            solver=[
                system_message("system.txt"),
                use_tools(tools),
                generate(),
            ],
            scorer=includes(),
            sandbox=("docker", "compose.yaml")
        )

System Prompt

In the article they also found that when tool instructions are long and/or complex, including instructions about the think() tool in the system prompt can be more effective than placing them in the tool description itself.

Here’s an example of moving the custom think() prompt into the system prompt (note that this was not done in the article’s SWE-Bench experiment, this is merely an example):

    from textwrap import dedent

    from inspect_ai import Task, task
    from inspect_ai.scorer import includes
    from inspect_ai.solver import generate, system_message, use_tools
    from inspect_ai.tool import bash_session, text_editor, think

    @task
    def swe_bench():

        think_system_message = system_message(dedent("""
            Use the think tool to think about something. It will not obtain
            new information or make any changes to the repository, but just
            log the thought. Use it when complex reasoning or brainstorming
            is needed. For example, if you explore the repo and discover
            the source of a bug, call this tool to brainstorm several unique
            ways of fixing the bug, and assess which change(s) are likely to
            be simplest and most effective. Alternatively, if you receive
            some test results, call this tool to brainstorm ways to fix the
            failing tests.
        """))

        return Task(
            dataset=read_dataset(),
            solver=[
                system_message("system.txt"),
                think_system_message,
                use_tools([
                    bash_session(timeout=180),
                    text_editor(timeout=180),
                    think(),
                ]),
                generate(),
            ],
            scorer=includes(),
            sandbox=("docker", "compose.yaml")
        )

Note that the effectiveness of using the system prompt will vary considerably across tasks, tools, and models, so it should definitely be the subject of experimentation.